The Hypertext Transfer Protocol (HTTP), the fundamental application layer protocol that underlies the World Wide Web, has undergone significant transformations over the years to become a robust, secure, and efficient medium for digital communication. The evolution of HTTP reflects a relentless pursuit of innovation in the face of growing demands from internet users.

To begin with, HTTP was originally conceived by Tim Berners-Lee, the inventor of the World Wide Web, who designed the protocol with simplicity in mind to support communication between web servers and clients. The first documented version of HTTP, known as HTTP/0.9, appeared in 1991. This early iteration laid the groundwork for subsequent developments, including HTTP/1.0, documented in RFC 1945 in 1996.

The next significant milestone came with HTTP/1.1, first published in 1997 (RFC 2068) and revised in 1999 (RFC 2616). HTTP/1.1 then remained largely unchanged in substance for well over a decade, but it paved the way for more substantial advancements in the years to come.

In 2012, the Internet Engineering Task Force (IETF) HTTP Working Group began a comprehensive revision of HTTP, using Google’s experimental SPDY protocol (pronounced "speedy") as its starting point. The result was the second major version of the protocol, HTTP/2, which was approved in February 2015 and published as RFC 7540 in May 2015.

Before diving into the intricacies of HTTP/2, it helps to understand what a protocol is. In essence, a protocol is a set of rules that governs how data is exchanged, for example between clients and servers. Many protocols structure each message into three components: a Header, a Payload, and a Footer (often called a trailer).

The Header carries control information such as the source and destination addresses and the size and type of the Payload. The Payload contains the actual data being transmitted. The Footer, where present, typically carries error-detection information such as a checksum, which lets the receiver verify that the message arrived intact; together with the Header, it helps ensure that data reaches its intended recipient without errors.

To illustrate this concept, consider the postal service. A letter (Payload) is placed in an envelope (Header) bearing the destination address, then sealed and stamped (Footer) before being dispatched. Digital communication follows the same principle using 0s and 1s, and the explosive growth of internet usage has continually demanded more efficient ways to package and deliver those bits.
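To make the Header/Payload/Footer idea concrete, here is a minimal Python sketch of a hypothetical toy message format (not HTTP or any real protocol). The header holds a 2-byte destination and a 4-byte payload length, and the footer holds a CRC-32 checksum computed over header and payload. The function names and field layout are illustrative assumptions.

```python
import struct
import zlib

# Hypothetical toy layout (not a real protocol):
#   header  = 2-byte destination + 4-byte payload length (big-endian)
#   payload = raw bytes
#   footer  = 4-byte CRC-32 over header + payload
HEADER = struct.Struct(">HI")
FOOTER = struct.Struct(">I")


def build_message(destination: int, payload: bytes) -> bytes:
    header = HEADER.pack(destination, len(payload))
    footer = FOOTER.pack(zlib.crc32(header + payload))
    return header + payload + footer


def parse_message(message: bytes) -> tuple[int, bytes]:
    destination, length = HEADER.unpack_from(message, 0)
    end_of_payload = HEADER.size + length
    payload = message[HEADER.size:end_of_payload]
    (checksum,) = FOOTER.unpack_from(message, end_of_payload)
    # The footer lets the receiver detect corruption in transit.
    if zlib.crc32(message[:end_of_payload]) != checksum:
        raise ValueError("checksum mismatch: message was corrupted")
    return destination, payload


message = build_message(80, b"hello")
print(parse_message(message))  # (80, b'hello')
```

In real networks this job is split across layers (for example, Ethernet frames carry a checksum trailer while HTTP messages do not), but the division of labor is the same.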

In its original form (HTTP/0.9), HTTP had a single command, GET, which retrieves a document from a server. HTTP/1.0 added further methods, including POST, which sends data from the client to the server. This simple request-response model has also served as the basis for many other protocols and services built on top of HTTP.
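HTTP/1.x requests are plain text: a request line, header lines, an empty line, and an optional body, with each line ending in CRLF. (HTTP/0.9's request was simply `GET /path`, with no version or headers.) The minimal Python sketch below assembles an HTTP/1.1 request by hand; `build_request` is a hypothetical helper, and real clients send additional headers.

```python
from typing import Optional


def build_request(method: str, path: str, host: str,
                  headers: Optional[dict[str, str]] = None,
                  body: bytes = b"") -> bytes:
    """Assemble a raw HTTP/1.1 request message (hypothetical helper)."""
    lines = [f"{method} {path} HTTP/1.1", f"Host: {host}"]
    for name, value in (headers or {}).items():
        lines.append(f"{name}: {value}")
    if body:
        # A body needs a length so the server knows where the message ends.
        lines.append(f"Content-Length: {len(body)}")
    # The header block is terminated by an empty line (CRLF CRLF).
    head = "\r\n".join(lines) + "\r\n\r\n"
    return head.encode("ascii") + body


print(build_request("GET", "/", "example.com"))
# b'GET / HTTP/1.1\r\nHost: example.com\r\n\r\n'
print(build_request("POST", "/form", "example.com", body=b"name=Ada"))
# b'POST /form HTTP/1.1\r\nHost: example.com\r\nContent-Length: 8\r\n\r\nname=Ada'
```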

The Need for Improvement in User Experience and Effectiveness

The evolution of the World Wide Web has been marked by a steady stream of innovations, driven by the need to improve user experience and effectiveness. A major step in this process is HTTP/2, a revised protocol designed to improve web performance and security.

Goal of Creating HTTP/2

Since its inception in the early 1990s, HTTP has seen only a few major overhauls. Before HTTP/2, the most recent version, HTTP/1.1, had served the web for over 15 years. However, as web pages have become more dynamic, with resource-intensive multimedia content and ever higher performance expectations, the limitations of HTTP/1.1 have become increasingly apparent.

The primary goal of the new version of HTTP is to combine three qualities rarely found together in a single network protocol without additional networking technologies: simplicity, high performance, and robustness. HTTP/2 pursues these goals with capabilities that reduce the latency of browser requests, such as multiplexing, header compression, request prioritization, and server push.

Additional mechanisms such as flow control, protocol upgrade, and error handling further help developers achieve high performance and resilience in web-based applications.

Together, these features let servers respond efficiently with more content than the client originally requested, so the browser does not have to discover and request every resource one at a time before the page is fully loaded. For instance, Server Push in HTTP/2 allows a server to send resources a page will need, such as stylesheets and scripts, before the browser asks for them; the browser can decline any it already has in its cache.

Efficient compression of HTTP headers reduces protocol overhead on every browser request and server response. If these terms are unfamiliar, don’t worry: each of these HTTP/2 features is discussed in detail below.

What Was Wrong With HTTP/1.1?

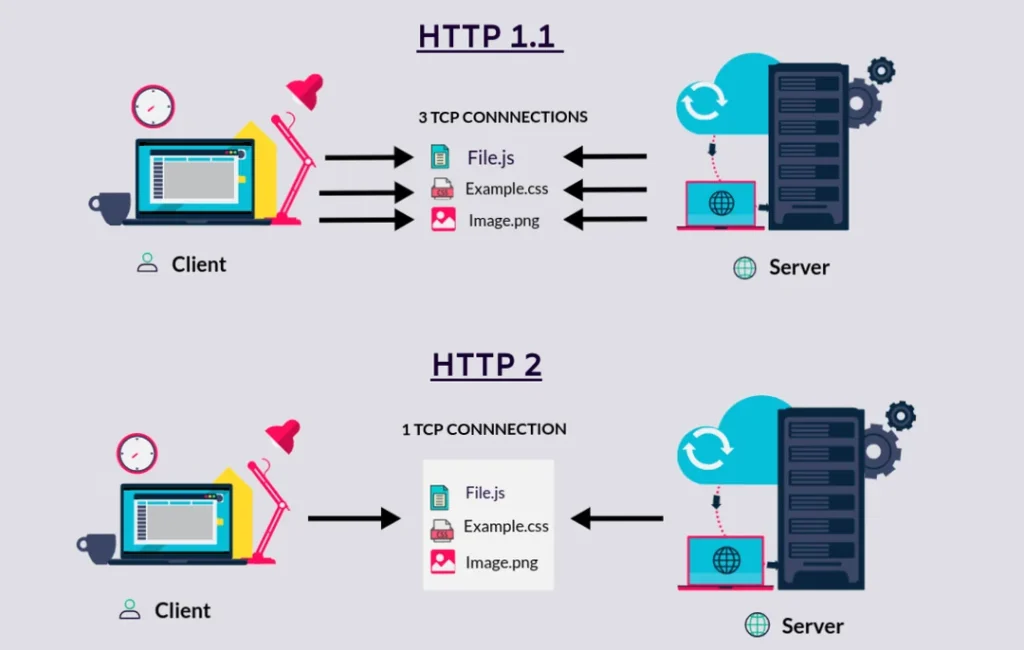

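In practice, HTTP/1.1 could handle only one outstanding request per TCP connection at a time, forcing browsers to open multiple TCP connections to process requests in parallel. (HTTP/1.1 pipelining, which lets a client send several requests without waiting, was defined but rarely enabled, because responses must return in request order and one slow response stalls the rest.)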
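The cost of this limit can be illustrated with a deliberately idealized Python model. It is an assumption-laden sketch, not a performance prediction: it counts only "waves" of request-response round trips and ignores bandwidth, TCP slow start, TLS handshakes, and server processing time. The six-connection default mirrors a per-host limit common in browsers, and the 100-stream HTTP/2 default reflects RFC 7540's recommended minimum for `SETTINGS_MAX_CONCURRENT_STREAMS`. Both values, like the function names, are illustrative assumptions.

```python
import math


def round_trips_http11(num_requests: int, max_connections: int = 6) -> int:
    """Idealized HTTP/1.1 model: each TCP connection carries one request at
    a time, so requests proceed in waves of max_connections."""
    return math.ceil(num_requests / max_connections)


def round_trips_http2(num_requests: int, max_concurrent_streams: int = 100) -> int:
    """Idealized HTTP/2 model: requests are multiplexed as concurrent streams
    on one connection, up to the peer's concurrent-stream limit."""
    return math.ceil(num_requests / max_concurrent_streams)


for n in (6, 20, 60):
    print(n, round_trips_http11(n), round_trips_http2(n))
# 6 1 1
# 20 4 1
# 60 10 1
```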

However, opening many TCP connections in parallel contributes to network congestion and lets one client take an unfair share of network resources. A browser that uses several connections to process additional requests occupies more of the available bandwidth, degrading network performance for other users.

Issuing many separate requests also puts a great deal of redundant data on the wire: each HTTP/1.1 request repeats largely identical, uncompressed headers such as cookies and User-Agent, adding overhead to every round trip.

The internet industry naturally worked around these constraints with techniques such as domain sharding, concatenation, data inlining, and image spriting, among others. Inefficient use of the underlying TCP connections in HTTP/1.1 also leads to poor resource prioritization, and performance degrades progressively as web applications grow in complexity, functionality, and scope.

The web has evolved well beyond what legacy HTTP-based networking technologies were designed for. Design choices made in HTTP/1.1 over a decade ago have left it with several significant performance and security weaknesses.

Session hijacking with stolen cookies, for instance, lets an attacker reuse a victim’s authenticated session, because HTTP itself does not bind a session to the identity of the client that created it. Similar security concerns still apply to HTTP/2, but the new protocol tightens its use of TLS: HTTP/2 over TLS requires TLS 1.2 or later and prohibits many weak cipher suites.

HTTP/2 Changes

The changes introduced by HTTP/2 are designed to maintain interoperability and compatibility with HTTP/1.1. Its advantages are expected to become clearer as real-world deployment experience accumulates, and how well it addresses performance issues in direct comparisons with HTTP/1.1 will shape its evolution over the long term.

It is important to note that HTTP/2 keeps the semantics of its predecessor (methods, status codes, and header fields) and changes only how they are encoded on the wire; it was not expected to replace HTTP/1.1 anytime soon, and the two continue to coexist. HTTP/2 also does not accept every TLS configuration usable with HTTP/1.1 (for example, it prohibits many older cipher suites), which steers deployments toward stronger encryption.

HTTP/2 Multiplexed Streams

Multiplexed streams are a key feature of HTTP/2, allowing multiple bidirectional sequences of binary frames to be exchanged between client and server concurrently over a single connection. Earlier versions of HTTP could transmit only one response at a time per connection, so each request had to wait for the previous one to complete.

Handling one request-response exchange at a time is inefficient, especially for pages with many resources or large amounts of media content. HTTP/2 addresses this with a new binary framing layer that lets client and server break each HTTP message into small, independent frames, interleave frames from different streams on the same connection, and reassemble them at the other end.
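The split-interleave-reassemble idea can be sketched in a few lines of Python. This is a simplified model under stated assumptions: the helper names are hypothetical, frames are plain `(stream_id, chunk)` pairs without HTTP/2's 9-byte frame headers, flags, or flow control, the 4-byte frame size is chosen only for readability (HTTP/2's default maximum frame payload is 16,384 bytes), and round-robin is just one possible scheduling policy. Odd stream IDs are used because client-initiated HTTP/2 streams have odd identifiers.

```python
from collections import defaultdict
from itertools import zip_longest

Frame = tuple[int, bytes]  # (stream_id, chunk)


def split_into_frames(stream_id: int, payload: bytes, max_frame_size: int) -> list[Frame]:
    """Cut one message into frames tagged with its stream identifier."""
    return [(stream_id, payload[i:i + max_frame_size])
            for i in range(0, len(payload), max_frame_size)]


def interleave(*streams: list[Frame]) -> list[Frame]:
    """Round-robin frames from several streams onto one 'connection'."""
    wire: list[Frame] = []
    for group in zip_longest(*streams):
        wire.extend(frame for frame in group if frame is not None)
    return wire


def reassemble(wire: list[Frame]) -> dict[int, bytes]:
    """Group frames by stream ID; order within each stream is preserved."""
    messages: defaultdict[int, bytes] = defaultdict(bytes)
    for stream_id, chunk in wire:
        messages[stream_id] += chunk
    return dict(messages)


html = split_into_frames(1, b"<p>hello</p>", 4)
css = split_into_frames(3, b"p{color:red}", 4)
wire = interleave(html, css)
print([stream_id for stream_id, _ in wire])  # [1, 3, 1, 3, 1, 3]
print(reassemble(wire))  # {1: b'<p>hello</p>', 3: b'p{color:red}'}
```

Because every frame carries its stream ID, the receiver never needs the two responses to arrive back to back, which is what lets a slow response share the connection with fast ones.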

The benefits of multiplexed streams are numerous:

- Parallel requests and responses do not block each other at the HTTP layer (packet loss can still stall all streams at the TCP layer).

- A single TCP connection is used to ensure effective network resource utilization despite transmitting multiple data streams.

- No need for optimization hacks, such as image sprites, concatenation, and domain sharding, which can compromise other areas of network performance.

- Reduced latency and faster page loads, which can also benefit search engine rankings.

- Potentially lower operating and capital expenditure (OpEx and CapEx) for network and IT resources.

HTTP/2 Server Push

Another key feature of HTTP/2 is the ability of a server to push cacheable resources that the client has not requested but is expected to need. For example, if the client requests resource X and resource Y is referenced by X, the server can choose to push Y along with X instead of waiting for the client to request it.

The client places the pushed resource into its cache for future use. This mechanism saves a request-response round trip and reduces network latency. Server Push was originally introduced in Google’s SPDY protocol.

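The server initiates a push by sending a PUSH_PROMISE frame on the stream of the original request. The frame carries the header fields, including pseudo-headers such as :method and :path, of the request the server is answering in advance; only cacheable, safe requests without a body (such as GET) may be pushed. Push is enabled by default, but the client can disable it entirely by sending the SETTINGS_ENABLE_PUSH setting with a value of 0, or cancel an individual pushed stream by sending RST_STREAM with that stream’s identifier.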
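On the wire, turning push off is a single small SETTINGS frame. The Python sketch below encodes one following the frame layout in RFC 7540: a 9-byte header (24-bit length, 8-bit type, 8-bit flags, 31-bit stream ID) followed by settings, each a 16-bit identifier plus a 32-bit value. SETTINGS is frame type 0x4 and SETTINGS_ENABLE_PUSH is identifier 0x2. The helper names are hypothetical, and a real client would send this frame after the HTTP/2 connection preface rather than on its own.

```python
import struct

SETTINGS = 0x4              # frame type (RFC 7540, section 6.5)
SETTINGS_ENABLE_PUSH = 0x2  # setting identifier


def frame_header(length: int, frame_type: int, flags: int, stream_id: int) -> bytes:
    """9-byte HTTP/2 frame header: 24-bit length, type, flags, 31-bit stream ID."""
    if not 0 <= length < 1 << 24:
        raise ValueError("frame length must fit in 24 bits")
    return (length.to_bytes(3, "big")
            + struct.pack(">BBI", frame_type, flags, stream_id & 0x7FFFFFFF))


def settings_frame(settings: dict[int, int]) -> bytes:
    """Each setting is a 16-bit identifier followed by a 32-bit value."""
    payload = b"".join(struct.pack(">HI", ident, value)
                       for ident, value in settings.items())
    # SETTINGS frames apply to the whole connection, i.e. stream 0.
    return frame_header(len(payload), SETTINGS, 0, 0) + payload


disable_push = settings_frame({SETTINGS_ENABLE_PUSH: 0})
print(disable_push.hex())  # 000006040000000000000200000000
```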

Other benefits of Server Push include:

- The client saves pushed resources in the cache.

- The client can reuse these cached resources across different pages.

- The server can multiplex pushed resources along with originally requested information within the same TCP connection.

- The server can prioritize pushed resources, which is a key performance differentiator between HTTP/2 and HTTP/1.1.

- The client can decline pushed resources to maintain an effective repository of cached resources or disable Server Push entirely.

- The client can also limit the number of pushed streams multiplexed concurrently (via the SETTINGS_MAX_CONCURRENT_STREAMS setting).

HTTP/2 Binary Protocols

One of the most significant changes in HTTP/2 is the move from a text protocol to a binary protocol. HTTP/1.x exchanges human-readable text messages to complete request-response cycles, whereas HTTP/2 encodes the same messages as binary frames.

Binary framing removes much of the complexity of parsing text messages, where message boundaries depend on line endings, optional whitespace, and varied rules for different headers, and it simplifies implementations as a result.

Although binary frames are harder for humans to read than text, they are easier and faster for software to generate and parse. The semantics of HTTP remain unchanged: browsers and servers that implement HTTP/2 encode the same methods, headers, and status codes as binary frames for faster processing.
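The parsing advantage is easy to see in code. Every HTTP/2 frame begins with a fixed 9-byte header (RFC 7540, section 4.1), so a parser reads fields at fixed offsets instead of scanning for spaces and CRLFs. Below is a minimal Python sketch; `parse_frame_header` and `FrameHeader` are hypothetical names, and only the frame types defined in RFC 7540 are listed.

```python
import struct
from typing import NamedTuple

FRAME_TYPES = {
    0x0: "DATA", 0x1: "HEADERS", 0x2: "PRIORITY", 0x3: "RST_STREAM",
    0x4: "SETTINGS", 0x5: "PUSH_PROMISE", 0x6: "PING", 0x7: "GOAWAY",
    0x8: "WINDOW_UPDATE", 0x9: "CONTINUATION",
}


class FrameHeader(NamedTuple):
    length: int
    frame_type: str
    flags: int
    stream_id: int


def parse_frame_header(data: bytes) -> FrameHeader:
    """Decode the fixed 9-byte header that starts every HTTP/2 frame."""
    if len(data) < 9:
        raise ValueError("need at least 9 bytes for a frame header")
    length = int.from_bytes(data[0:3], "big")
    type_code, flags, raw_stream = struct.unpack(">BBI", data[3:9])
    name = FRAME_TYPES.get(type_code, f"UNKNOWN(0x{type_code:x})")
    # The top bit is reserved; the stream ID is the low 31 bits.
    return FrameHeader(length, name, flags, raw_stream & 0x7FFFFFFF)


print(parse_frame_header(bytes.fromhex("00000d010400000001")))
# FrameHeader(length=13, frame_type='HEADERS', flags=4, stream_id=1)
```

Here flag 0x4 on a HEADERS frame is END_HEADERS, meaning the whole header block fits in this one frame.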

The benefits of binary protocols include:

- Compact representation of commands for easier processing and implementation.

- Efficient and robust in terms of processing data between client and server.

- Reduced network latency and improved throughput.

- Reduced exposure to security problems associated with the textual nature of HTTP/1.x, such as response splitting attacks.

HTTP/2 Stream Prioritization

HTTP/2 also allows the client to indicate a preference for particular streams. Although the server is not bound to follow these signals, they allow it to allocate network resources according to what the end user needs most.

Stream prioritization works through dependencies and weights. Each stream may declare a dependency on another stream (a stream without an explicit dependency depends on the root, stream 0) and is assigned a weight between 1 and 256; sibling streams that depend on the same parent share resources in proportion to their weights. The details of this scheme were long debated, and RFC 9113, which revised the HTTP/2 specification in 2022, deprecated it, but the concept of client-signaled priorities was an important feature upgrade in HTTP/2.
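The weighting rule for sibling streams can be sketched as a proportional split. This Python example is a simplification under stated assumptions: `share_bandwidth` is a hypothetical helper, "capacity" is an abstract unit, and the dependency tree itself (a parent is served before its children) is left out. It reproduces the RFC 7540 example in which a weight-4 stream receives one third of the resources given to its weight-12 sibling.

```python
def share_bandwidth(capacity: float, weights: dict[int, int]) -> dict[int, float]:
    """Split capacity among sibling streams (same parent) in proportion to
    their weights, as RFC 7540 describes for dependent streams."""
    for stream_id, weight in weights.items():
        if not 1 <= weight <= 256:
            raise ValueError(f"stream {stream_id}: weight must be 1-256")
    total = sum(weights.values())
    return {stream_id: capacity * weight / total
            for stream_id, weight in weights.items()}


# Two siblings: stream 3 (weight 4) and stream 5 (weight 12).
print(share_bandwidth(1000, {3: 4, 5: 12}))  # {3: 250.0, 5: 750.0}
```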

HTTP/2 Push Authority and Header Compression

As a built-in safeguard, a server may push only resources for which it is authoritative, and it must announce each one with a PUSH_PROMISE frame before sending it. Pushed streams are then prioritized and multiplexed like any other response, so they benefit from the same transmission performance. For header compression, HTTP/2 uses the HPACK algorithm rather than a general-purpose compressor such as DEFLATE, which SPDY used and which the CRIME attack exploited to recover secrets such as cookies from compressed headers. HPACK encodes header fields as indexes into a static table and a per-connection dynamic table, with optional Huffman coding of literal values, which limits what an attacker can infer from compressed sizes while still shrinking header metadata substantially.
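A small piece of HPACK can be sketched in Python to show why compressed headers are so compact. The example implements HPACK's prefixed integer encoding (RFC 7541, section 5.1) and the "indexed header field" representation against a handful of real static-table entries (RFC 7541, Appendix A). The dynamic table, literal representations, and Huffman coding are omitted, and the helper names are hypothetical.

```python
# A few entries from the HPACK static table (RFC 7541, Appendix A).
STATIC_TABLE = {
    (":method", "GET"): 2,
    (":method", "POST"): 3,
    (":path", "/"): 4,
    (":path", "/index.html"): 5,
    (":scheme", "http"): 6,
    (":scheme", "https"): 7,
    (":status", "200"): 8,
}


def encode_integer(value: int, prefix_bits: int, flags: int = 0) -> bytes:
    """HPACK integer with an N-bit prefix (RFC 7541, section 5.1)."""
    max_prefix = (1 << prefix_bits) - 1
    if value < max_prefix:
        return bytes([flags | value])
    out = [flags | max_prefix]
    value -= max_prefix
    while value >= 128:
        out.append((value % 128) | 0x80)  # 7 bits per byte, high bit = "more"
        value //= 128
    out.append(value)
    return bytes(out)


def encode_indexed(name: str, value: str) -> bytes:
    """Indexed header field: a 1 bit followed by a 7-bit-prefix table index."""
    return encode_integer(STATIC_TABLE[(name, value)], 7, flags=0x80)


pseudo_headers = [(":method", "GET"), (":scheme", "https"), (":path", "/")]
encoded = b"".join(encode_indexed(n, v) for n, v in pseudo_headers)
print(encoded.hex())  # 828784
```

Three header fields that would take dozens of bytes as HTTP/1.1 text shrink to three bytes here. The integer encoder also reproduces the RFC 7541 worked examples (10 and 1337 with a 5-bit prefix).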

Conclusion

From the perspective of online businesses, the web is getting slower as pages fill with ever larger volumes of media-rich content. HTTP/2 was developed to make client-server data communication more efficient, so that online businesses can reach their target markets effectively and internet users can access web content faster. On top of that, the conditions under which people use the web vary more than ever.

Internet speed differs across networks and geographic locations. An increasingly mobile user base expects seamless, high-performance access on every device form factor, even though congested cellular networks cannot compete with high-speed broadband. HTTP/2, a thoroughly revamped networking and data communication mechanism, emerged as a viable solution to these challenges.

The term “web performance” sums up all the advantages of HTTP/2 changes.

In conclusion, HTTP/2 is an innovative solution that offers numerous advantages over its predecessors. With its improved performance, security, and user experience, it has the potential to change the way we interact with the web, and online businesses and internet users alike stand to benefit.
